If two numbers are separated by a dash (-), they represent a range of values, with the larger value usually for the thinnest plates of the armor type. Also, in this HTML document, font restrictions sometimes force the elimination of the accent marks used in several non-English words, such as the French gavre, though some, such as in the German grüson and härte, can be used (if the font allows).

- Material

Name of armor/construction material in common use at the time by the people using it. If "average" is in the name, then the material is the average of two or more materials of the same kind made at the same time by more than one manufacturer for the same purpose, but for which separate data either is not known or is not important, since the material is used interchangeably and which manufacturer's plate was used in any single case is not known.

- Country

Nation making this particular kind of armor/construction material.

- Company

Manufacturer of the armor/construction material.

- Time Frame

Years that armor was manufactured or used aboard ship, as relevant.

- Tensile1

Tensile Strength. Test sample's minimum slow stretching force per unit original cross-sectional area needed to tear the sample into two separate parts, in pounds/square inch. The higher that this is, the stronger the metal is against slowly-increasing, non-impact loads.

- Yield1

Yield Strength. Test sample's minimum slow stretching force per unit original cross-sectional area needed to make the sample permanently lengthen by 2%, in pounds/square inch. The higher that this is, the stronger the metal is against slowly-increasing, non-impact loads, but only against impact loads if the tensile strength is going up by the same percentage.

- Y/T1

Yield Strength To Tensile Strength Ratio. The closer to "1" that this value is, the less "give" the material has and more brittle the material will be if all else is equal.

- % EL1

Percent Elongation. Percent by which the sample's length had increased just as it snapped in two. The larger the value, the more ductile the sample.

- % RA1

Percent Reduction In Area. Percent of the sample's original cross-sectional area by which the narrowest point of the sample had shrunk just as it snapped in two. The larger the value, the more ductile the sample.
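
The five tensile-test columns defined above all come from a handful of raw measurements. As a minimal sketch of that arithmetic (the function name, argument names, and sample numbers are hypothetical and chosen only for illustration; they are not data for any real plate):

```python
def tensile_test_summary(max_force_lb, yield_force_lb, orig_area_sq_in,
                         orig_length_in, final_length_in, final_area_sq_in):
    """Derive the tabulated tensile-test quantities from raw measurements."""
    # Both strengths are force per unit ORIGINAL cross-sectional area (lb/sq in).
    tensile_psi = max_force_lb / orig_area_sq_in
    yield_psi = yield_force_lb / orig_area_sq_in
    return {
        "tensile_psi": tensile_psi,
        "yield_psi": yield_psi,
        # Closer to 1 means less "give" before failure.
        "y_over_t": yield_psi / tensile_psi,
        # Length gained at the moment of fracture, as a percent of original length.
        "pct_elongation": 100.0 * (final_length_in - orig_length_in) / orig_length_in,
        # Area lost at the narrowest point, as a percent of original area.
        "pct_reduction_in_area": 100.0 * (orig_area_sq_in - final_area_sq_in) / orig_area_sq_in,
    }


# Hypothetical sample: 0.2 sq in bar, 2 in gauge length.
summary = tensile_test_summary(20_000, 15_000, 0.2, 2.0, 2.5, 0.08)
for name, value in summary.items():
    print(f"{name}: {value:.2f}")
```

Larger elongation and reduction-in-area values together with a lower Y/T ratio indicate the more ductile sample, in line with the definitions above.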

- Brinell

Brinell Hardness Number. Developed in the early 20th Century in Sweden as a measure of the resistance of a material to local deformation under a near-point stress, here a tiny tungsten (wolfram)-carbide ball under a 3,000 kg (6,613.9 lb) load (other versions of this scale exist, but this covers the largest range for hard materials). A formula for the size of the pit formed gives the Brinell Number, with wrought iron being about 100 (actually, 105 is the average) and circa 794 being as hard as the hardest pure cementite (actually, as the hardness goes above 650, the tiny ball begins to flatten out and the values give a greater difference than is actually there, while above 739 the tiny ball flattens out so badly that it cannot be used). This is only one of several competing hardness scales, but one of the most widely used, so I use it in place of such possibly more accurate hardness scales as Rockwell "B" and "C" (58 RB = 105 Brinell, 22.5 RC = 100 RB = 240 Brinell (usual cross-over point where RC replaces RB for harder materials), 65 RC = 739 Brinell) or Vickers Pyramidal (97.5 RB = 20 RC = 238 Vickers = 226 Brinell (minimum RC and Vickers), 251 Vickers = 240 Brinell, and 832 Vickers = 739 Brinell). Though Brinell testing is not usually used at such high hardness values, a hardness of 66 RC is roughly 757 Brinell, 67 RC is roughly 775, and 68 RC (highest RC used) is roughly 794 - these Brinell values would need a test ball harder than tungsten carbide to reach (diamond?). A slash (/) means "face maximum/back average" for face-hardened armors.

The hardness values given here are typical for the given plate type, usually with a range of about 20-30 up and down for a hard face and 5-10 up and down for the rest, centered on the given value. Many specifications have step-values for metal properties at certain pre-defined thicknesses, complicating evaluations over the entire thickness range.
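
The "formula for the size of the pit" is not spelled out above. As a hedged sketch, the usual textbook form divides the load by the curved surface area of the indentation. The 10 mm ball diameter used in the example is an assumption (it is the common size paired with the 3,000 kg load and is not stated in the text), and the function name is hypothetical:

```python
import math


def brinell_number(load_kgf, ball_diameter_mm, indent_diameter_mm):
    """Brinell number = load / curved surface area of the indentation.

    HB = 2P / (pi * D * (D - sqrt(D^2 - d^2)))
    with P in kgf, ball diameter D and indentation diameter d in mm.
    """
    if not 0.0 < indent_diameter_mm < ball_diameter_mm:
        raise ValueError("indentation diameter must lie between 0 and the ball diameter")
    D = ball_diameter_mm
    d = indent_diameter_mm
    return 2.0 * load_kgf / (math.pi * D * (D - math.sqrt(D * D - d * d)))


# Assumed 10 mm ball under the 3,000 kg load; the pit diameters are illustrative only.
for pit_mm in (5.5, 4.0, 2.5):
    print(f"pit {pit_mm} mm -> Brinell {brinell_number(3000, 10.0, pit_mm):.0f}")
```

A smaller pit gives a larger number, which is why a flattening ball (which widens the pit) distorts the readings at the top of the scale.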

- Usage

Portions of the ship that the material is used on and why, including restrictions to thickness used, if any.

- Armor

Metal plate used primarily to keep enemy weapon effects outside of an area of a target, sometimes also being used as part of the construction material for building that portion of the target, but in many cases just an additional layer of specially-formulated resistive material placed on top of the separate construction material. Also called "protective plating" and sometimes only designed to protect against secondary effects such as blast or fragmentation, while at other times designed to resist direct hits by the enemy weapons expected to be used against it under specified conditions (gun range and Target Angle - direction target is moving compared to line-of-fire of gun at it (90 degrees is broadside-on) - for gun projectiles or aircraft altitude for bombs).

- Armor-Piercing (AP)

Projectiles designed to penetrate heavy armor (over circa half-caliber thickness) under a specified set of conditions with minimal projectile damage, so that the projectiles will cause the maximum expected damage to the target, such as exploding as designed if they have an explosive filler. The maximum impact obliquity and plate thickness allowing this gradually increased as time went on and metallurgical expertise increased. Explosive filler, if used at all, was very small (4% or less in World War I and 3% or less in World War II) and a base fuze was used, with or without a time delay element. Naval designs usually employed an AP cap after circa 1898 to allow them to remain unshattered against contemporary face-hardened armor.

- Ballistic Limit

Minimum striking velocity of a specific projectile against a specific plate under a given set of conditions (impact obliquity, etc.) that will allow the projectile to barely defeat the plate using its kinetic energy (not meaningful for projectiles that rely primarily on their explosive power to damage the plate hit), where the definition of "defeat" varies with the date, the nation/manufacturer doing the test, the armor type, and/or where on the target it is used - "Complete Penetration" or "Base Through" (U.S. "Navy" BL) or "Through Crack" (U.S. "Army" and British Standard BL through the end of World War II) or "Protection" (specified damage to a thin "witness" plate spaced a short distance behind the armor plate becoming the new post-World War II U.S. Army BL) being the three most widely used definitions of Ballistic Limit. "Nose Through" (tip of projectile nose extended past plate back surface into space behind plate) is another, less widely used BL, for example.

- Brittle

Failure of a material by sudden change from essentially no effect to total collapse in little or no time as the applied force goes above a threshold. Usually caused by the material having its yield and tensile strengths too close together, so that any yield at all immediately results in the unyielding portion of the sample next to the yielding portion having its burden increase past its tensile strength, snapping it apart, and starting an avalanche of failure as less and less of the object remains to try to support the entire load. If the material can "give" under the load fast enough, it can keep its net force below the tensile strength and not break or tear open until there is literally no more metal left to stop the force (soft taffy or high quality wrought iron can approximate this), which will prevent brittle behavior. Most materials have a maximum rate that a force can be applied before the object acts in a brittle manner. Brittle materials tend to have molecular bonds that cannot re-form properly once they are broken, unlike ductile materials where one molecule is considered just the same as any other and bonds break and re-form continuously as the material deforms under the applied force. Note that Iron alloys are somewhat temperature sensitive and older forms, especially up to the end of World War I, tended to get brittle when the temperature dropped below the freezing point of water, though this had less effect on the tougher, Nickel-alloy steels; most post-World War I steels were much better due to the reduction in the amount of impurities in the metal and tighter quality control, so that much lower temperatures were needed to cause any increased brittleness.

- Common

Projectiles designed for use against unarmored or relatively lightly armored targets, including aircraft and, in a few cases, submarines. May have a nose fuze and/or a base fuze, with the latter usually being used in those designs with no nose fuze and some armor-penetration capability (usually limited to about half-caliber-thick armor at most impact obliquities) and a smaller explosive charge (over 3% of total projectile weight in World War II and over 4% in World War I), called "Semi-Armor-Piercing" (SAP). Base-fuzed designs usually were very similar to armor-piercing designs, except for their 50-200% larger explosive charge and generally lighter construction (the larger the filler charge, the lighter the projectile body was). Nose-fuzed designs were of the large-filler "high explosive/high capacity" (HE/HC) type, with those dedicated to anti-aircraft use with time or, later, VT nose fuzes also called "AA Common." Base fuzes were always impact types ("Base Detonating" (high explosive filler) or "Base Ignition" (black powder filler)) using the inertia of a weighted firing pin thrown forward on impact to set off the sensitive primer, with or without an internal black powder short-delay element. A few SAP designs (British World War I 6-15" (15.2-38.1 cm) Common, Pointed, Capped (CPC) and post-World War I 8" (20.3 cm) SAPC; U.S. post-World War I 8" Mark 15 "Special" Common; and German post-1934 38 cm Spgr.m.Bdz.u.K. (14.96" High Explosive Projectile with Base Fuze and AP Cap)), employed AP caps for use against the (thinner portions of) face-hardened armor of many larger warships, but most did not. Some SAP-type Common designs, such as World War I British Navy CPC and U.S. Navy "Bombardment" (or, later, "Class B") projectiles, had very large fillers (circa 10%) equal to the fillers of the lightest HE/HC designs.

- Construction Material

Metal plate designed to support the ship portion that it is used in against normal forces due to gravity, ship motion, water pressure, equipment design, and so forth, usually without regard to protection from enemy weapon hits, though some extra-strong construction materials, such as U.S. Navy BuShips "Special Treatment Steel" (STS) or British "D"-steel, provided both.

- Ductile

Ability to be slowly stretched and twisted without cracking, finally tearing apart along a surface at right angles to the applied force when the molecules of the object can no longer hold the object together. Ductile materials can break their inter-molecular bonds and immediately re-form new bonds with other nearby molecules with little or no loss of strength as they deform under an applied force. Slowly-applied-force equivalent of tough.

- Face-Hardened

Armor plate surface impacted by the enemy weapon is hardened to a much higher level than the back surface of the plate in an attempt to cause such damage to the weapon that it has reduced penetrating power or impaired explosive capability or, hopefully, both. The actual method of hardening (quenching after manufacture or chilling during cooling from the original liquid metal state) and the depth and shape of the hardness contour inside the plate vary considerably from plate type to plate type and sometimes from plate to plate of a single type if poor quality control occurs. Use of this kind of armor must be restricted to cases where the damage to the enemy weapon caused by the armor reduces its penetration, which is not the case at high obliquity (shallow impact angle), where a weapon that stays in one piece is more likely to ricochet completely away with minimal target damage than one whose nose is broken off and thus whose middle body and base can continue to punch through the plate even after the nose has ricocheted off. Also, face-hardened armor fails by having most of the armor in the projectile's path punch through the plate back where it acts as a second solid-shot-type projectile (sometimes in one huge piece, but usually broken up), increasing target damage; this is made worse by the fact that such a "plug" of armor can be ejected from a brittle face-hardened plate at striking velocities well below those where the projectile itself can penetrate the plate, which severely compromises the protection afforded by the plate.

- Hard

Ability to resist being permanently deformed by a slowly-applied or rapidly-applied force (depending on the test used) on a small area. Very hard materials are usually also brittle and suddenly fail over a large area when the applied force exceeds the shear or tensile strength of the material.

- High Explosive/High Capacity (HE/HC)

Projectiles of the "common" type that usually employed some kind of nose fuze (though most U.S. World War II HC designs could have their nose fuzes replaced aboard ship by a solid steel nose plug and used as rather weak SAP-type projectiles (see common) relying on their impact base fuzes against unarmored or very lightly-armored targets) and that had a very large explosive filler charge (4-10%). These projectiles were not designed to penetrate armor of any significant thickness, even when using a steel nose plug at near-right-angles impact, and usually had almost no penetration capability except for the power of their explosive filler due to their nose fuze virtually always being set off by any solid metal plate impact, even when a fuze other than an impact fuze (designed to be set off when crushed against the object hit) was used - only when a non-detonating black powder booster charge and/or filler was used with a strengthened nose fuze would the delay be long enough to allow the projectile to punch through a metal plate prior to the projectile exploding and even here the nose-fuzed projectile's body was usually so thin that only a near-right-angles impact against very thin plate would allow the projectile to keep from being broken apart during the penetration (e.g., British post-World War I 6" (15.2 cm) HE used an impact nose fuze with a black powder booster and could penetrate intact up to about 1" (2.54 cm) of homogeneous armor at near-right-angles). Many kinds of nose fuzes were used: Impact ("Point Detonating" or "Direct Action"), Powder (burning black powder (gunpowder)) or Mechanical (clockwork) Time (set off on firing the gun), mid-World War II anti-aircraft Variable Time (VT mini-radar "proximity" or "influence"), U.S. 
Navy post-World War I Auxiliary Detonating Fuze (ADF) (a unique safety-precaution second fuze inserted under the main nose fuze, which was only armed by the spinning of the projectile after firing and was normally set off only by the main nose fuze when it went off as designed), and even pressure-sensitive underwater anti-submarine fuzes. During World War II, the U.S. ADF fuze was found to be set off after a very short delay by impact shock against plates only slightly thinner than those that set off the projectile's base fuze, limiting these HC projectiles with steel nose plugs inserted, when used as base-fuzed, delay-action SAP-type Common projectiles, to completely unarmored or very lightly armored targets, since the ADF, like the base fuze, was not removable aboard ship. Sensitive explosive fillers were used with such projectiles in many cases, since penetrating ability prior to filler explosion was not a major desired property.

- Homogeneous

Having the same metallurgical and physical properties everywhere within and on it.

- Scaling

Reduction in the ballistic limit of an armor type when all metallurgical properties of the plate and projectile, the projectile shape, the impact obliquity, projectile damage, and so forth are kept constant, but the sizes of both the projectile and the plate are increased by a given amount (e.g., a 3" (7.62 cm) projectile versus a 2" (5.08 cm) plate is replaced by, say, a 6" (15.2 cm) projectile and a 4" (10.16 cm) plate, both identical scale models of the first projectile and plate). Face-hardened armors have a scaling effect that increases rapidly with a decrease in the percentage thickness of the plate's unhardened back layer when the back layer goes below about 65% of the total plate thickness (due to brittle fracture of the hard face layers being a surface phenomenon, increasing only with the square of the scale (much slower than increased projectile and armor plug weight increase), while ductile deformation and tearing of the soft back is a volume-related phenomenon which increases with the cube of the scale, in step with increased projectile weight). The most ductile homogeneous armors only have a very tiny scale effect, but this increases as they get less and less ductile (due to reduced ability to stretch sideways to get out of the projectile's path before the armor splits apart, which is an increasing problem for the plate material near the impact center as the projectile gets wider), as measured by a decrease in the "Percent Elongation" from the circa 25% of the best ductile homogeneous armors, such as U.S.
Navy World War II BuShips "Special Treatment Steel" (STS), to, for example, 18% Elongation for German World War II "Wotan Härte" (Wh) armor, which has a roughly 13.2% drop in the Navy BL for Wh plates when otherwise-identical projectile diameter increases from 8" (20.3 cm) to 14.96" (38 cm) against otherwise-identical plates scaled from, say, 6" to 11.22" (28.5 cm), compared to only a 1.4% drop for similar STS plates, though both plate types have virtually the same Navy BL (scale effects identical) against smaller projectiles. For homogeneous armors, the optimum ductility increases with absolute scale - due to the sideways stretch problem just mentioned, largely caused by factors such as the metal's speed of sound and crystal size that do not change with scale - and the 25% Elongation value seems to be the minimum to allow the minimum scale effects when against projectiles over 8". Relative scale effects due to a thicker or more oblique plate having a greater Navy BL at any scale also require that the plate have increased toughness for a maximum Navy BL, usually obtained by reduced hardness and increased Percent Elongation, on top of the absolute scale effects applicable to all plates. (See scaling under Factors Affecting Homogeneous, Ductile Plate Resistance for more information.)
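
The scaled-plate example and the square-versus-cube argument above reduce to a few lines of arithmetic. In this sketch the function names and the 1,500 ft/sec starting ballistic limit are hypothetical; only the diameters, the 6" plate, and the 13.2% and 1.4% drops come from the text:

```python
def scale_factor(old_projectile_diameter, new_projectile_diameter):
    """Linear scale ratio between two otherwise-identical projectiles."""
    return new_projectile_diameter / old_projectile_diameter


def reduced_ballistic_limit(base_limit, percent_drop):
    """Ballistic limit after a given percentage drop due to scaling."""
    return base_limit * (1.0 - percent_drop / 100.0)


s = scale_factor(8.0, 14.96)          # 8" projectile scaled up to 14.96"
print(f"scale factor: {s:.3f}")
print(f"6 in plate scales to {6.0 * s:.2f} in")
# Surface-related effects grow with the square of the scale,
# volume-related effects (and projectile weight) with the cube.
print(f"surface growth: {s ** 2:.2f}x, volume growth: {s ** 3:.2f}x")

base_limit = 1500.0                    # hypothetical Navy BL at the 8" scale, ft/sec
for armor, drop in (("Wh", 13.2), ("STS", 1.4)):
    print(f"{armor}: {reduced_ballistic_limit(base_limit, drop):.0f} ft/sec at the 14.96 in scale")
```

The widening gap between the squared and cubed factors as the scale grows is the reason the hard face layer contributes proportionally less resistance against larger projectiles.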

- Shear

Force applied like a scissors so that it all lies in a single plane without the rest of the object being involved in the resistance to that force. Very different mechanical properties from tensile forces and much more susceptible to brittle failure. Cracks form by shear between two layers of molecules and anything that can divert or spread out the forces causing the crack at its tip can stop it in its tracks. Tough metals tend to have high shear strength, but the two are not always in step with each other; non-metallic fibers, for example. Materials fail by shear when their molecules break apart along a surface that is parallel to (actually, no more than a 45° angle to) the applied force, as opposed to ductile tearing. Materials weak in shear strength usually are also limited in that the break along the sheared surface cannot recombine with material next to it, as can most ductile materials, so any molecular splitting is permanent, making the material act in a brittle manner when the material finally fails under a load.

- Tensile

Force applied as a pull at each end of an object in a straight line so that it does not bend. In tests, the force is usually gradually applied to show both yield and tensile strengths.

- Tough

Resistance to cracking under sudden impact loading where the metal has minimum time to adjust to the force before it breaks or tears open. Usually considered the opposite of brittle. The Charpy and Izod toughness tests were developed after World War I to measure how tough a material is: They take a long sample, hold one end in a vice, put a notch or groove in the sample just above the gripping point and then hit the sample sideways just above the notch/groove with a calibrated swinging or dropping hammer so that the sample must fold sharply at the notch/groove. How hard the hammer must hit the sample before it breaks or tears at the notch/groove and the manner in which the failure occurs measures the metal's toughness - tough materials should fold virtually double before splitting in two, while brittle materials snap off like pieces of a china cup dropped on a hard floor. Toughness is dependent on temperature, where cold temperatures make the metal object more rigid and thus more brittle and shrinkage of the Iron crystals weakens the bond between them (see refrigeration for more details on this). The Charpy and Izod tests also give the energy needed to break the sample, which is an absolute strength parameter. I only consider toughness relative to the tensile and yield strengths of the material, where to me wrought iron is very tough (stretches considerably under load so it is hard to crack or break) even though it can be torn apart more easily than some stronger, but more brittle, materials.
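
The energy figure that these tests report can be illustrated with a simple energy balance for a swinging hammer. This is a sketch under stated assumptions, not a description of any particular test machine: the function name and sample numbers are hypothetical, and friction and air resistance are ignored.

```python
STANDARD_GRAVITY = 9.80665  # m/s^2


def pendulum_absorbed_energy_j(hammer_mass_kg, release_height_m, rise_height_m):
    """Energy absorbed in breaking the sample, in joules.

    The hammer starts with potential energy m*g*h at its release height and
    swings up to a lower height after breaking the sample; the difference
    is what the sample absorbed.
    """
    if rise_height_m > release_height_m:
        raise ValueError("hammer cannot rise higher than its release height")
    return hammer_mass_kg * STANDARD_GRAVITY * (release_height_m - rise_height_m)


# Hypothetical readings: a tough sample stops the hammer sooner than a brittle one.
print(f"tough:   {pendulum_absorbed_energy_j(20.0, 1.5, 0.5):.1f} J")
print(f"brittle: {pendulum_absorbed_energy_j(20.0, 1.5, 1.4):.1f} J")
```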

Iron without Carbon or other alloying elements mixed in has a more-or-less fixed internal structure at room temperature and below of ferrite, which is unchanged by heat treatments and is only slightly changed by using mechanical working to reshape and/or resize its crystals; most of its quality variation is caused by impurities or poor smelting practice which allows holes and cracks to form inside the metal. The same thing applies to wrought iron, which has a negligible Carbon content (usually under 0.08%).

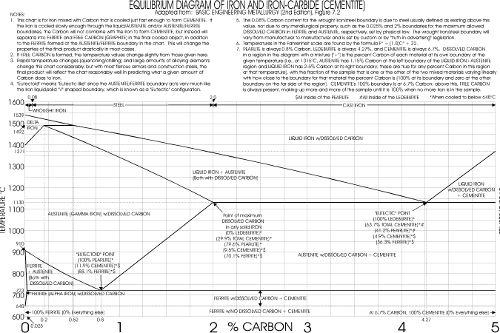

If Carbon over about 0.025% by weight is added (the maximum that ferrite can hold internally at any temperature) and mixed evenly into otherwise pure liquid Iron, things change radically. When ferrite is heated above 723° Celsius (1333.4° Fahrenheit), known as the Critical Temperature (which I will call the "Critical Hardening Temperature" or CHT to ensure no confusion with any other "critical" temperatures), doing it in a manner that the heat is spread evenly throughout the entire sample and all changes to the Iron have time to finish occurring ("equilibrium" conditions hold), the ferrite begins to change to another crystal called austenite, which can absorb up to 2% Carbon at 1130°C (2066°F) in the rather large gaps at the center or boundaries of each crystal "cell." With no Carbon in the mixture, it takes a temperature of 910°C (1670°F) to completely change to austenite, but this drops in almost a straight line with increasing Carbon content to the CHT when the Carbon content reaches 0.8%, which is the maximum amount of Carbon austenite can hold at the lower CHT. This 0.8% remains constant for all larger amounts of Carbon.
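
Since the temperature for complete change to austenite is described as falling in almost a straight line from 910°C at zero Carbon to the CHT at 0.8% Carbon, a linear interpolation gives a rough estimate in between. This is only a sketch of that straight-line approximation (the real boundary is slightly curved), and the names are hypothetical:

```python
PURE_IRON_FULL_AUSTENITE_C = 910.0   # complete change to austenite with no Carbon
CHT_C = 723.0                        # Critical Hardening Temperature
CARBON_AT_CHT_PCT = 0.8              # Carbon content where the line reaches the CHT


def full_austenite_temp_c(carbon_pct):
    """Straight-line estimate of the temperature for complete change to austenite."""
    if not 0.0 <= carbon_pct <= CARBON_AT_CHT_PCT:
        raise ValueError("straight-line estimate only covers 0 to 0.8% Carbon")
    drop = (PURE_IRON_FULL_AUSTENITE_C - CHT_C) * carbon_pct / CARBON_AT_CHT_PCT
    return PURE_IRON_FULL_AUSTENITE_C - drop


def c_to_f(temp_c):
    """Convert Celsius to Fahrenheit."""
    return temp_c * 9.0 / 5.0 + 32.0


for carbon in (0.0, 0.2, 0.4, 0.8):
    t = full_austenite_temp_c(carbon)
    print(f"{carbon:.1f}% Carbon: about {t:.0f} C ({c_to_f(t):.0f} F)")
```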

When the liquid Iron solidifies, it first forms austenite and when the hot solid austenite form of Iron cools very slowly through the CHT it dissolves the austenite and forms into crystals of ferrite (more crystals than the austenite, if the size of the crystals is the same, since fewer Iron atoms are needed per crystal "cell" in ferrite). Ferrite has no available empty spaces in its crystals and cannot internally absorb more than about 0.025% Carbon (and this much only at the CHT) - the percentage of Carbon that can be held by ferrite steadily drops toward zero when the temperature is lowered to 648°C (1198.4°F) or raised to 910°C, as the crystals contract in size as the temperature lowers or gradually dissolve and change into austenite as the temperature rises to near the 910°C point, as mentioned above, so Carbon above 0.025%, as well as many other impurities, are either forced out of the ferrite crystals into the narrow gaps between the crystals as they grow when the temperature is lowered below 910°C (usually new crystals grow from the boundaries of the previous form of crystal, when not being formed directly from the liquid Iron state, since these discontinuities act as crystallization nuclei or "seeds," which allows the size and shape of old austenite crystals to modify the size and shape of new ferrite crystals and vice-versa as heat treatments proceed) or, if the temperature drop is fast enough, some or all of the Carbon is trapped in the forming ferrite crystals and the intense pressure as the Iron atoms try to form into ferrite causes the trapped Carbon atoms to chemically combine with the Iron atoms to form cementite - cementite is "metastable" in that if the temperature is again raised to form austenite, it begins to break up back into austenite and Carbon (the higher the temperature, the faster this breakup happens) and the Carbon can be re-absorbed by the empty spaces in the austenite crystals. 
However, the Carbon may have been physically moved by the austenite-to-ferrite change and no longer be evenly spread through the metal, so narrow regions near the old ferrite crystal boundaries may have too much Carbon to absorb immediately, while other areas have almost none - if one waits long enough, however, the Carbon will slowly move (diffuse) through the hot austenite and more evenly redistribute itself due to the Brownian motion (intense vibrations) of the hot Iron and Carbon atoms, which are no longer held in place by the Iron atoms; this is the basis of the hardening technique called cementing.

If the austenite with Carbon is cooled rapidly through the CHT, much less Carbon has time to move out of the forming ferrite crystals and much more of it forms cementite (extremely rapid cooling of Carbon-containing austenite can form a third crystal structure called martensite, but this is not formed at anywhere near the "equilibrium" conditions being discussed here), depending on the size and shape of the original austenite crystals, how much Carbon is in the mixture, and how fast the cooling occurs - other alloying elements added to Iron are primarily used to adjust the rates of change and final crystal structures to increase the ease (and/or reduce the cost) of manufacture (or even to allow some kinds of crystal structures to be created at all). Iron with Carbon between the minimum 0.025% (usually the practical minimum is set at 0.08% and any Carbon less than this is merely considered an impurity in the otherwise Carbon-free "pure" Iron) and the maximum 2% is called steel and is the most widely used and versatile form of Iron. Most of the following discussion is about how to manipulate steel by heat treatments, alloying it with other elements besides Carbon, and mechanical working.

As the percentage of Carbon is increased from zero, the melting point of Iron drops rapidly in a nearly straight line from 1539°C (2802.2°F), nearly as high as Platinum and very expensive to try to reach in a manufacturing plant, to 1130°C (2066°F) at very close to the 2% Carbon point, with only partial melting of the austenite occurring at temperatures above this line until a second, higher, nearly straight line of total melting occurs from 1539°C at zero Carbon to the 1130°C level at 4.27% Carbon, above which the melting point stays constant, at least through 5% Carbon, which is as high as we need worry about here. (Note: Iron initially has a third high-temperature crystal structure called "Delta Iron" if the Carbon content is below 0.52%, but this turns into austenite when the temperature drops below 1400-1492°C (2552-2717.6°F), depending on the Carbon content, so it is of no significance to us.) When put on a graph with temperature increasing vertically and Carbon content increasing to the right, both linearly, this mixed liquid/solid region looks like a shark dorsal fin curving up to the left with its tip at zero Carbon and 1539°C. When more than 4.27% Carbon is used at just above 1130°C, the extra Carbon solidifies (precipitates) either as free Carbon (graphite, described below) and ferrite or as cementite, leaving the remaining liquid at the 4.27% Carbon level (this need not concern us any more here). When less than 4.27% Carbon exists at just above 1130°C, down to the 2% Carbon point where solid austenite replaces all of the liquid Iron, some of the liquid will precipitate as austenite with 2% Carbon in it, again leaving some liquid with 4.27% Carbon. 
The amount of liquid left over in the 2-4.27% Carbon range just above 1130°C is simply directly proportional to how far the actual Carbon content is away from the 2% solid value - if the Carbon content is, say, 3.135%, which is exactly halfway between the 2% solid austenite and 4.27% liquid Iron points, then exactly half of the Iron is liquid and half is solid 2%-Carbon-containing austenite, mixed together in random blobs and swirls. This same rule works for any fixed-temperature level within the mixed solid/liquid region. Simply note the Carbon contents at the two boundary points where this region is cut by the horizontal temperature line and any solid in the mixture will have the Carbon content of the solid austenite at that boundary at that temperature and any liquid will have the Carbon content of its boundary point. The only thing changing will be the fraction of the metal that is liquid (the rest is all solid austenite) and that is found by calculating the ratio of (how far from the solid austenite boundary the Carbon content is) over (the Carbon content difference between the two boundary points). For example, 1315.6°C (2400°F) is the temperature of complete melting for 2.5% Carbon, while at this same temperature, the solid austenite boundary is at about 1.15% Carbon. If you have an Iron sample at 1315.6°C with, say, 2% Carbon in it, the solid austenite part has 1.15% Carbon, the liquid Iron part has 2.5% Carbon, and the percentage of the sample that is liquid is (100)(2-1.15)/(2.5-1.15) = 85/1.35 = 63%, with the rest solid austenite, all more-or-less randomly mixed together (the points where melting occurs are like points where crystals start: "seeds" where there is some difference from the surrounding points that makes it easier to change there).
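
The proportional rule just described is easy to check numerically. A minimal sketch, using a hypothetical function name and only the boundary values quoted in the text:

```python
def liquid_fraction(carbon_pct, solid_boundary_pct, liquid_boundary_pct):
    """Fraction of the metal that is liquid at one fixed temperature.

    solid_boundary_pct  : Carbon content of the solid austenite boundary
    liquid_boundary_pct : Carbon content of the total-melting boundary
    The result is (distance from the solid boundary) / (width of the region).
    """
    if not solid_boundary_pct <= carbon_pct <= liquid_boundary_pct:
        raise ValueError("Carbon content lies outside the mixed solid/liquid region")
    return (carbon_pct - solid_boundary_pct) / (liquid_boundary_pct - solid_boundary_pct)


# Just above 1130 C: boundaries at 2% (solid) and 4.27% (liquid).
halfway = liquid_fraction(3.135, 2.0, 4.27)
# At 1315.6 C: boundaries at about 1.15% (solid) and 2.5% (liquid).
example = liquid_fraction(2.0, 1.15, 2.5)

print(f"3.135% Carbon just above 1130 C: {100 * halfway:.0f}% liquid")
print(f"2% Carbon at 1315.6 C: {100 * example:.0f}% liquid")
```

The solid part always has the Carbon content of the solid boundary and the liquid part that of the liquid boundary, so only the split between the two changes with the overall Carbon content.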

Note again that the changes have to be made slowly enough for the material to adjust to them before any significant change occurs. In real life changes are made faster than this in most cases (time is money!), so some parts of the metal will change faster than others due to uneven heating/cooling or not enough time to allow complete diffusion or precipitation, but in many cases the rates of change are close enough to the ideal values given here to allow a good estimate as to what to expect at the end. When really fast changes are used, this is no longer true and more complex ways of diagramming the results are needed.

If the Carbon content is over 2%, the metal is called cast iron. It is made up at room temperature of ferrite, cementite, free Carbon, pearlite, and/or a combination crystal of cementite and pearlite called ledeburite. As with steel, if the cooling rate of the cast iron is very slow, a considerable amount of free Carbon is squeezed out of the Iron crystals into the gaps between them (forming grey cast iron made up mostly from ferrite crystals with Carbon "chips" in-between), while a more rapid cooling rate will retain more of this Carbon, creating cementite and, in turn, more ledeburite (forming white cast iron), which always contains 4.27% Carbon, essentially all in the form of cementite - this fixed 4.27% is the result of all other Iron and Carbon having already solidified out of the liquid prior to the ledeburite forming, keeping the liquid Iron at exactly 4.27% Carbon. Only this liquid at the 1130°C point will form ledeburite as it solidifies, so the percentage of ledeburite in the final object will depend only on the amount of liquid Iron - no liquid or ledeburite at 2% Carbon (all ferrite and cementite and/or free Carbon) and 100% liquid and ledeburite at 4.27% Carbon (the amount of ledeburite formed decreases above 4.27% as the amount of external cementite increases) - minus any ferrite and free Carbon formed from the liquid due to using a very slow cooling rate - with the part of an under-4.27% Carbon cast iron that was solid austenite being formed exactly like 2% Carbon steel under the same conditions, all swirled together to complicate things.
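
The Carbon thresholds used so far, and the upper limit on how much ledeburite a cast iron can contain, can be summarized in a short sketch. The function names are hypothetical, the 0.08% cut-off is the "practical minimum" mentioned earlier, and the ledeburite figure is only an upper bound because slow cooling reduces it by an amount the text does not quantify:

```python
def classify_by_carbon(carbon_pct):
    """Name the material by Carbon content, using the practical thresholds."""
    if carbon_pct < 0.0:
        raise ValueError("Carbon content cannot be negative")
    if carbon_pct < 0.08:
        return "iron"        # Carbon this low is treated as a mere impurity
    if carbon_pct <= 2.0:
        return "steel"
    return "cast iron"


def max_ledeburite_fraction(carbon_pct):
    """Upper bound on the ledeburite fraction: the liquid fraction at 1130 C.

    Zero at or below 2% Carbon, rising in proportion to 1.0 at 4.27% Carbon.
    Above 4.27% the fraction falls again by an amount not given in the text.
    """
    if carbon_pct > 4.27:
        raise ValueError("not quantified above 4.27% Carbon")
    if carbon_pct <= 2.0:
        return 0.0
    return (carbon_pct - 2.0) / (4.27 - 2.0)


for carbon in (0.05, 0.4, 2.5, 3.135, 4.27):
    print(f"{carbon}% Carbon: {classify_by_carbon(carbon)}, "
          f"ledeburite at most {100 * max_ledeburite_fraction(carbon):.0f}%")
```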

Cast iron is usually very brittle and not as strong as steel, but its low melting point of 1130°C allows cast iron to be rather easily liquified and poured into molds, creating many very inexpensive Iron products that would be hard to form by manipulating a solid piece of hot Iron - cast steel is also used for this reason, though it takes a higher temperature to melt it and only a rather small percentage of steel objects are made by casting. Cast iron is also a good material for handling compressive loads and damping vibrations, if they are not too violent, and this is why it has been used extensively for mounts supporting shipboard equipment from the deck. The main drawback for using cast iron or cast steel in armor is the inability to use any kind of mechanical method to alter the crystal structure after casting, since the object cast cannot be deformed, especially cast iron. As a result, the crystal structure tends to be even more coarse, irregular, and brittle than it otherwise would be, limiting further heat treatments to rather mild ones if cracking due to thermal stress is not to occur. Most cast armor is hardened directly from the molten state to prevent such stress build up (see chilling), but post-hardening tempering heat treatments are limited, since most cast armor is rather thick (which is one of the main reasons casting was used in the first place) and heat treating the center of thick objects is difficult even with more ductile materials. This makes the final cast steel or cast iron product even more brittle. 
Another point is that most post-hardening heat treatments result in at least some softening of the cast iron and even the hardest form of chilled white cast iron is too soft (well under 500 Brinell) to allow much softening if it is to perform its function (it is possible to make a rather soft, ductile form of cast iron called malleable cast iron by reheating and extremely slowly cooling a white cast iron object, but this is not a process used with armor). Cast armor is only used when a somewhat lower grade product is acceptable and increased speed or reduced cost of manufacture is more important (for example, late- and post-World War II cast steel tank armor, though even here welded rolled steel armor was preferred) or there is no other way to manufacture the item (see Grüson chilled cast iron armor).

A crystal (also called a "grain") is made up of one or more elements or compounds whose atoms or molecules are placed in repeating sequences, so that inside a single crystal of the material the structure in one place is virtually identical to that in another, except for any imperfections or irregularities like missing atoms, extra atoms, twisted crystals where entire planes of atoms are missing or extra planes wedged into the crystal, and dirt mixed into the crystal - there are even crystals that have other crystals embedded in them or that are formed by mixtures of other crystals. It is these embedded/mixed crystals that are the major forms of crystals in steel and cast iron and give these metals their many properties. Each repeating building block or structure is a "cell" and the entire crystal is made up of these cells repeating themselves many millions of times. Each crystal starts at some point where a "seed" makes that point somehow different from the surrounding material in such a way that it is slightly easier for the atoms of Iron and/or other element or compound to grab onto an adjacent atom or molecule and begin weaving the crystal structure outward until it runs up against the edge of the object or up against other similar crystals that are also growing outward from "seeds." Note that a seed can be almost anything, but that it is necessary to have a seed to start a crystal; if not, it is possible to super-cool a material well below the temperature that the material normally forms the crystals at and the material will still remain in its original state until something happens to force a point in the material to change its properties enough to act as a seed, at which time a very rapid crystallization will occur, sometimes allowing unusual properties not present when the material forms its crystals immediately at the time that the temperature went below the crystal-forming critical temperature. 
A rapid drop in temperature that is much faster than the rate of crystal formation can cause a similar effect, which is the primary method to harden Iron with Carbon.

The crystals that form are not oriented between themselves and are tilted and/or offset from each other in every possible manner. This, plus the fact that there will usually be small gaps, dirt, excess Carbon, and so forth at the edges of Iron crystals, makes the strength of the crystal internally usually much higher than the binding strength between separate, adjacent crystals. Therefore, making any kind of failure process of the material have to pass through as many different crystals as possible by reducing the size of the average crystal will toughen and strengthen the material markedly. Alternatively, making the crystals large so that they have the least number of boundaries that a crack or tear must jump across to split the material apart, will weaken the material and render it more brittle. If the crystals are made round, then the material will be rather soft, since the crystals can move past each other easily. If the crystals are irregular in shape and size, then some spots will be stress points where the crystals are pressing harder against one-another and some places on the crystal that are sticking out are liable to break off, reducing the strength compared to a force that must pass through the maximum volume of each crystal; both factors making the material much more brittle. If a kind of crystal is excessively hard and brittle, mixing it in the proper way with tough and ductile crystals can combine the best of both types and result in a material better than either one by itself. Therefore, by selecting the kinds of crystals properly and interlocking them in the optimum manner, many different properties can be achieved in Iron and steel alloys from the same basic building blocks. Actually doing this took many years of hard, trial-and-error work during the 19th and 20th Centuries and new steel manufacturing methods are being developed to this day.

The various defects in a crystal are formed, deleted, changed, and moved about during heat treatment and mechanical working and these imperfections give Iron alloys many of their best (and worst) properties. In fact, the reason a yield point exists is that these defects move as a crystal deforms and tend to jam together, locking their atoms in place, until the force gets strong enough to tear them free - each tiny crystal thus deforms in small jumps, being locked into a fixed shape in-between. Ductile failure is smooth only when viewed at a distance. A metal object made from a single perfect crystal would have properties different from the same object made using the regular compositions, forms, and sizes of crystals now in use. Crystals near the boundary conditions where they form or dissolve (depending on the direction of changing temperature) will grow until they run into one-another and then they will continue to grow by cannibalizing each other - one crystal stripping adjacent crystals of their atoms and adding them to its outer edge - until, theoretically, a single huge crystal could form, though very slowly in most cases (small single-crystal metal objects, such as jet engine compressor blades, are manufactured today due to special techniques that speed up crystal growth, but limit it to only the "seed" that the manufacturer has put into the mold to start the crystallization process). This crystal growth is another limitation put on the time that certain heat treatments can be applied, if crystals of the wrong size are not to result. The effects of alloying elements on this factor, such as slowing or speeding up this process, is another reason that they are used.

Body-centered cubic structure. This means that each of its cells is composed of a cube of eight Iron atoms in the corners, with a ninth Iron atom in the exact center of the cube. Each corner Iron atom is the corner of seven more adjacent cells (except if the atom is at the edge of the crystal) and these cells stretch in all directions until the edge of the crystal is reached. This structure is very isotropic (the same strength in all directions) and the Iron atoms merely reform new cells if the crystal is deformed and any existing cells are broken, allowing the crystal to be very tough (in the sense of being crack-resistant, not in the sense of being hard to deform, since wrought iron, which is almost all ferrite, is relatively easily pulled apart) and ductile and only split apart when the deformation physically separates one part of the crystal from another part, which merely results in two smaller, stretched ferrite crystals where one existed before. Brinell hardness of about 80-100, depending on crystal size/shape. Can internally contain up to 0.025% Carbon at 723°C, but none at room temperature.

Face-centered cubic structure. This means that eight Iron atoms make up the corners of each cell, as in ferrite, but the center is empty, allowing small atoms like Carbon to fit inside, though when one does it enlarges the cell somewhat and makes the similar openings in adjacent cells more tightly closed, limiting the number of Carbon atoms allowed inside to a maximum of 0.8% of the total weight of the metal at 723°C and a maximum of 2% at 1130°C due to the thermal expansion of the metal opening the cell holes enough for more Carbon to fit before they squeeze close the remaining holes. Instead, each of the six sides of the cell has a fifth Iron atom at its center in an "X" pattern, so the cell contains 14 Iron atoms rather than nine (fewer cells per volume of crystal than ferrite) and the cells are each somewhat larger (further reducing the number of cells per volume of crystal). This crystal is the densest form of Iron. The crystal is just as isotropic as ferrite, but it only exists normally at a higher temperature, so it is even more ductile. Many steel mechanical working processes are done to Iron in its austenite phase since only here is it soft enough due to the elevated temperature to do so. Brinell hardness of about 110 (estimated).
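The statement that this is the densest form of Iron can be checked roughly by comparing the volume each atom gets in the two cell types. The sketch below uses the cell edge lengths that this document quotes for ferrite (2.87 angstroms) and austenite (3.57 angstroms). The nine and 14 atoms counted in the text are the atoms touching one cell; because neighboring cells share them, the number that belongs to a single cell is smaller, and the sharing rule used here is standard crystallography rather than something taken from this document. The two edge lengths are not measured at the same temperature, so the result is only indicative.

```python
# Sharing rule (standard crystallography, not from the text): a corner atom is
# shared by 8 cells, a face-center atom by 2 cells, a body-center atom by 1.
def atoms_per_cell(corners: int, faces: int, centers: int) -> float:
    """Number of atoms that belong to one cubic cell after sharing."""
    return corners / 8 + faces / 2 + centers


def volume_per_atom(edge_angstrom: float, atoms: float) -> float:
    """Cubic cell volume divided by the atoms belonging to it (cubic angstroms)."""
    return edge_angstrom ** 3 / atoms


ferrite_atoms = atoms_per_cell(corners=8, faces=0, centers=1)    # body-centered
austenite_atoms = atoms_per_cell(corners=8, faces=6, centers=0)  # face-centered

ferrite_vol = volume_per_atom(2.87, ferrite_atoms)      # edge length quoted in this document
austenite_vol = volume_per_atom(3.57, austenite_atoms)  # edge length quoted in this document

print(f"ferrite:   {ferrite_atoms:.0f} atoms/cell, {ferrite_vol:.2f} cubic angstroms per atom")
print(f"austenite: {austenite_atoms:.0f} atoms/cell, {austenite_vol:.2f} cubic angstroms per atom")
```

With these figures each Iron atom in austenite occupies slightly less volume than in ferrite, which is consistent with austenite being the denser form.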

A compound of Iron and Carbon, Fe3C, formed by internal pressure when austenite turns into ferrite and it will eventually turn back into ferrite or austenite and free Carbon if it can, though it can remain stable for a time inside austenite at just above the CHT (the higher the temperature, the faster it breaks down into austenite and Carbon). At below the CHT, it can remain stable indefinitely in most cases, though some forms of Iron will gradually "age" and change their internal structure as they undergo stresses from their own weight or from applied forces as they are used. Contains 6.7% Carbon by weight. Each cell is made up of a triangular pyramid with the three Iron atoms forming an equilateral triangle base and the Carbon atom forming the top point of the pyramid. These pyramids are interlocked by bonding of the Iron and Carbon atoms in adjacent crystal cells into an intricate pattern of interlocking diamond shapes of Iron set into a rectangular array of Carbon atoms. This multi-faceted array of triangular shapes is extremely rigid, strong, and hard, but also extremely brittle. It has planes of weakness and once a Carbon/Iron or Carbon/Carbon bond breaks, it usually cannot be restored, allowing cementite to suffer catastrophic avalanche-type failure if the applied force ever exceeds the yield strength of the material anywhere within it. Cementite supplies the primary strength of steel and cast iron, while the ferrite supplies a cushioning and support role to keep the cementite from being directly impacted - much like even a single layer of paper can prevent brittle glass objects from breaking when they touch each other during shipping, while without the paper even a seemingly slight impact can cause the glass to shatter. Cementite is white in color. Brinell hardness of about 794 (an extrapolated value equal to 68 RC, the highest RC value).
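The 6.7% Carbon figure follows directly from the Fe3C formula: one Carbon atom for every three Iron atoms, weighted by atomic mass. A minimal check, assuming standard atomic weights (these values are not given in this document and the function name is only illustrative):

```python
# Standard atomic weights in g/mol (an outside assumption, not from the text).
FE_ATOMIC_WEIGHT = 55.845
C_ATOMIC_WEIGHT = 12.011


def carbon_weight_percent(fe_atoms: int, c_atoms: int) -> float:
    """Weight percent of Carbon in a compound of fe_atoms Iron and c_atoms Carbon."""
    carbon = c_atoms * C_ATOMIC_WEIGHT
    total = fe_atoms * FE_ATOMIC_WEIGHT + carbon
    return 100.0 * carbon / total


print(f"Fe3C: {carbon_weight_percent(3, 1):.2f}% Carbon by weight")
```

The result rounds to the 6.7% quoted above.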

This is the only other form of Carbon in steel or cast iron and it usually is in the form of graphite, an extremely soft, weak, and slippery form of Carbon that is used as a lubricant and has some of these same properties in steel and cast iron when it forms. It can make cast iron and steel more brittle when compressed between irregularly shaped crystals, as in grey cast iron, but it can have the reverse effect if it forms between rounded crystals where it is free to move when put under pressure. For example, malleable cast iron (never used in armor) is made from hard white cast iron by heating the object to 927°C (1700.6°F) - well above the CHT but still solid - and holding it there for 50 hours until much of the Iron and Carbon has separated and then very slowly cooling it. It has many of the properties of low-Carbon steel (except for the steel's strength) even though it has a large amount of Carbon in its structure, almost all of it as large lumps ringed with thick ferrite shells sprinkled within a matrix of rounded pearlite crystals, while retaining the advantages of the low melting point of the original white cast iron when originally shaping the object by molding rather than by hammering, rolling, or forging. On the other hand, grey cast iron is made of very slowly cooled cast iron directly from the liquid state, so that the Carbon and Iron only partially mixes together as the metal solidifies and continues to cool very slowly through the austenite phase and past the CHT to the ferrite phase; most of the Carbon remains in the cracks between the ferrite crystals in strips and moderately large chunks or chips, where it renders the metal very brittle without increasing the hardness or strength of the metal very much, with only some pearlite being formed from the Carbon that did mix with the Iron as it cooled.

Crystal always containing 0.8% Carbon by weight - in the form of 88.1% ferrite and 11.9% cementite in an interleaved pattern similar to straight zebra stripes. It forms naturally in slowly cooling steel and cast iron. The ferrite and cementite complement one-another to make this a very good basis for making strong, yet ductile and tough, steel. Named after the way the tiny layers act as a diffraction grating to give a rainbow tint to the material under a microscope. Hardness increases as the original mixture's Carbon content or the cooling rate increases, as long as the transformation temperature does not fall below 538°C (1000.4°F), where another crystal bainite replaces it. As the 538°C boundary approaches, each pearlite crystal formed is harder and has thinner and more numerous stripes until at the boundary the metal seems to be a uniform grey color. The fixed 0.8% Carbon content is due to that being the Carbon content of austenite just above the CHT for the same reason that ledeburite has 4.27% Carbon: Above 0.8% Carbon, the excess Carbon will precipitate out of the austenite as cementite or free Carbon, though at a much slower rate than with a liquid/solid transformation, while below 0.8% Carbon, but above the 0.025% Carbon that ferrite can contain at the CHT, ferrite will slowly precipitate out until only 0.8%-Carbon austenite is left. Unless the cooling rate is so slow that ferrite and free Carbon form instead of cementite, pearlite will be formed directly from the austenite as the ferrite portion forms, coated with the extra ferrite, if under 0.8% Carbon, or extra cementite, if over 0.8%.
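The 88.1% ferrite and 11.9% cementite split can be reproduced from the Carbon contents already given: 0.8% for pearlite, 6.7% for cementite, and essentially none for ferrite at room temperature. The sketch below applies the usual straight-line "lever rule" (an assumption about method; the function names are hypothetical). It also estimates how much of a slowly cooled steel below 0.8% Carbon ends up as pearlite, using the 0.025% Carbon that ferrite can hold at the CHT.

```python
CEMENTITE_C = 6.7       # % Carbon in cementite (from the text)
PEARLITE_C = 0.8        # % Carbon in pearlite (from the text)
FERRITE_C_ROOM = 0.0    # % Carbon in ferrite at room temperature (from the text)
FERRITE_C_CHT = 0.025   # % Carbon in ferrite at the CHT (from the text)


def cementite_fraction_in_pearlite() -> float:
    """Lever rule between Carbon-free ferrite and 6.7%-Carbon cementite."""
    return (PEARLITE_C - FERRITE_C_ROOM) / (CEMENTITE_C - FERRITE_C_ROOM)


def pearlite_fraction(carbon_pct: float) -> float:
    """Fraction of a slowly cooled steel (0.025% to 0.8% Carbon) that becomes pearlite."""
    if not FERRITE_C_CHT <= carbon_pct <= PEARLITE_C:
        raise ValueError("this sketch only covers 0.025% to 0.8% Carbon")
    return (carbon_pct - FERRITE_C_CHT) / (PEARLITE_C - FERRITE_C_CHT)


cem = cementite_fraction_in_pearlite()
print(f"pearlite = {100 * (1 - cem):.1f}% ferrite + {100 * cem:.1f}% cementite")
print(f"0.4% Carbon steel: about {100 * pearlite_fraction(0.4):.0f}% pearlite")
```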

Crystal made up of pearlite lumps embedded in a matrix of cementite. Nearly white in color. Contains 4.27% Carbon by weight. Forms the major component of white cast iron and is created by very rapidly cooling Iron with over 2% Carbon from the liquid state using a chilling process of some sort to prevent the formation of grey cast iron, which is created directly from liquid Iron by very slow cooling by breaking up cementite into ferrite and free Carbon. Hard, but the existence of the relatively soft pearlite within it reduces the hardness compared to the cementite and martensite of high-Carbon steel that has been quenched in an optimum manner. Rather brittle, but less brittle than grey cast iron due to the extensive pearlite content. When the cooling rate is relatively slow, the pearlite lumps are rounded and look a lot like watermelon seeds, alternating with long zebra-stripes of pearlite, embedded in the surrounding cementite matrix. When a very rapid chill is used, the stripes replace the seeds and the stripes get very thin and bunched into bundles that look like many black and white toothpicks of varying sizes packed together parallel to each other. The bundles from different crystals are jammed into each other at various angles, so that the white cementite is not obviously the surrounding medium (matrix) and any cementite and ferrite formed prior to the chill from the original liquid (when the Carbon content is not 4.27%) are bound tightly with the new cementite and ferrite formed inside the ledeburite to the point that they all seem to be the same. Makes up most of the face layer of Grüson chilled cast iron armor, which probably had about 4% Carbon (typical). Brinell hardness can be from about 425-500, with the deep chilling needed for the Grüson armor requiring a rapid surface cooling that would tend to give the surface a higher hardness, estimated circa 475 Brinell.

Similar to pearlite when viewed under a microscope, and having a similar range in Carbon content, though somewhat more random in its striping (more like a leopard than a zebra). Occurs when the cooling rate is fast enough so that the transformation from austenite occurs in the range 260-538°C (500-1000.4°F), with the lower the temperature, the more irregular, finer-grained, and harder it becomes. Near the 538°C boundary where pearlite stops forming, bainite is more brittle than pearlite without being much harder - this is called upper bainite due to the higher temperature of transformation - note that temper brittleness occurs in the 371°C (699.8°F) to 650°C (1202°F) range, which neatly boxes in upper bainite, indicating that they may be related. The 260°C boundary is where martensite forms instead of bainite. Just above this boundary, the form of bainite is termed lower bainite and it has many of the properties of martensite and is even used in martensite's place when brittleness is not a major criterion, using a hardening technique called austempering. Bainite has its cementite grains arranged in an elongated "fern-leaf" (radiating rib-like) pattern - with the lower the formation temperature, the smaller and finer the pattern - that pattern does not allow complete spheroidization, so tempering it does not work as well as with martensite. Bainite can also form when the quenching process is not carried out long enough to drop the temperature below the 260°C boundary to fully form martensite. Because upper bainite is relatively brittle without any advantage over pearlite, it is not desired in any kind of armor. 
The formation of upper bainite in the center of very thick plates of Japanese Vickers Hardened non-cemented face-hardened armor due to the use of the old pre-World War I (circa 1910) Vickers version of the Krupp cemented hardening process, which was never designed for such massive plates, caused them to split in two on impact, giving a perfect example of why this crystal structure was not desirable.
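The temperature boundaries given for pearlite, bainite, and martensite can be gathered into a small lookup. This is only a sketch: the function names are hypothetical, the 723°C value for the CHT is inferred from the temperatures quoted for ferrite and austenite, the treatment of a temperature falling exactly on a boundary is an arbitrary choice, and the real outcome also depends on Carbon content, alloying elements, and how long the temperature is held.

```python
CHT_C = 723.0             # critical temperature, inferred from the text
PEARLITE_FLOOR_C = 538.0  # below this bainite replaces pearlite (from the text)
BAINITE_FLOOR_C = 260.0   # below this martensite replaces bainite (from the text)


def c_to_f(temp_c: float) -> float:
    """Convert Celsius to Fahrenheit."""
    return temp_c * 9.0 / 5.0 + 32.0


def transformation_product(hold_temp_c: float) -> str:
    """Name the structure austenite turns into at a given transformation temperature."""
    if hold_temp_c >= CHT_C:
        return "austenite (no transformation)"
    if hold_temp_c >= PEARLITE_FLOOR_C:
        return "pearlite"
    if hold_temp_c >= BAINITE_FLOOR_C:
        return "bainite"
    return "martensite"


for temp in (700.0, 538.0, 400.0, 260.0, 100.0):
    print(f"{temp:6.1f} C ({c_to_f(temp):7.1f} F): {transformation_product(temp)}")
```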

If the Iron above the CHT is suddenly cooled to 260°C (500°F) or less, its atoms cannot move prior to being frozen in place, so the austenite face-centered cubic structure remains. This also occurs at higher temperatures when the Carbon content of the metal is significant, but the time that it takes for the austenite and Carbon to change to the final lower-temperature form (pearlite or bainite or white martensite) varies enormously. Assuming the simplest case of 0.8% Carbon by weight, when the temperature is held barely below the CHT it will take a long time (many hours) for austenite to change to ferrite, resulting in pearlite when it finally happens. When the temperature is dropped very rapidly to about 570°C (1058°F) and held there (the transformation temperature or "Hold Temperature"), it only takes about 0.8 second to begin to change to pearlite and 4.8 seconds for it to completely change. When the highest bainite temperature is reached as the Hold Temperature - 538°C (1000.4°F) - this is not changed by much, but as the Hold Temperature drops further, the time before transformation starts begins to increase again, but much more slowly so that it is only about 35 seconds at 260°C (500°F), the lowest bainite temperature, while the time to complete transformation to lower bainite at 260°C increases to almost 20 hours. When the Hold Temperature goes down to just below 260°C, a strange thing suddenly happens: The transformation from austenite stops happening and it will remain austenite indefinitely! As the Hold Temperature continues to go down, martensite begins to form instantly on reaching that temperature, with a fixed percentage of the austenite changing to white martensite at each Hold Temperature, no matter how long the temperature remains there. As the Hold Temperature continues to drop, the percentage of white martensite increases until all of the austenite is changed to white martensite instantly on reaching -73.3°C (-100°F). 
(The percentage of white martensite that is created is not a linear relation with decreasing Hold Temperature, but increases slowly near 260°C and -73.3°C and increases very rapidly in the middle, with 93.3°C (200°F) being the 50% transformation point.) The percentage of retained austenite at room temperature is about 20% - to eliminate this requires dropping the temperature of the object the rest of the way to -73.3°C for a short time to change it to white martensite or going back up to a near-CHT tempering temperature to change it to pearlite. Since austenite has a cell of about 3.57A (angstroms, each one-hundred-millionth (10^-8) of a cm) on a side (larger when heated to near-liquid temperature) and ferrite only has a cell size of 2.87A, the force on this structure as it tries to turn into ferrite is enormous, greatly increasing the brittleness of the metal. If the retained austenite is at room temperature after a quench to form white martensite, it also has the property that it will change spontaneously to white martensite, an even more brittle material, if the temperature drops (winter setting in or moving the object around in the ocean to near the North or South Pole), so the object is unstable from that angle also. The lower the Carbon content of the steel, the faster the initial temperature drop rate must be to form martensite and the more difficult this is to achieve, so the smaller the proportion of white martensite and the larger the proportion of retained austenite. The process of "tempering" has as one of its major goals the removal of this "retained" austenite so as to toughen and stabilize the metal. Note that some alloying elements lower the CHT to the point where austenite forms at room temperature regardless of the cooling rate, which obviously eliminates most heat treatments. This form of metallurgical property and timing modification by using alloying elements other than carbon is the reason for using them in the first place.
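The S-shaped relation between Hold Temperature and the percentage of white martensite described above (slow near 260°C and -73.3°C, rapid in the middle, 50% at 93.3°C) can be mimicked with a simple smooth curve. The "smoothstep" shape used here is purely an assumption chosen for illustration, not a metallurgical law or the author's method; the function name is hypothetical and the numbers apply only to the 0.8% Carbon case discussed above.

```python
MARTENSITE_START_C = 260.0    # no white martensite forms at this temperature (from the text)
MARTENSITE_FINISH_C = -73.3   # all austenite has transformed here (from the text)


def martensite_fraction(hold_temp_c: float) -> float:
    """Assumed S-curve for the fraction of austenite converted to white martensite."""
    span = MARTENSITE_START_C - MARTENSITE_FINISH_C
    t = (MARTENSITE_START_C - hold_temp_c) / span
    t = min(1.0, max(0.0, t))         # clamp outside the transformation range
    return t * t * (3.0 - 2.0 * t)    # smoothstep: slow at both ends, fast in the middle


for temp in (260.0, 93.3, 20.0, -73.3):
    converted = martensite_fraction(temp)
    print(f"{temp:7.1f} C: {100 * converted:5.1f}% white martensite, "
          f"{100 * (1 - converted):5.1f}% retained austenite")
```

With this assumed curve, a quench that stops at a room temperature of 20°C leaves roughly 19% retained austenite, close to the "about 20%" figure given above.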

Martensite has two forms: Fresh or white martensite, formed by the initial strong cooling to 260°C (500°F) or below from austenite, and tempered or yellow martensite, formed by a breakdown of white martensite into cementite and ferrite in a unique pattern. White martensite forms under the same conditions as retained austenite, with virtually none forming at the 260°C temperature (all retained austenite, instead) and a steady increase in percentage as the temperature of transformation drops until virtually all of the austenite turns into white martensite at -73.3°C (-100°F); this transformation is essentially instantaneous, unlike the transformation of austenite to pearlite or bainite, which may take some time if not assisted by various alloying elements (see retained austenite). White martensite is a face-centered tetragonal crystal, which has each cell consist of a rectangular prism (not a cube) 2.84A by 3A (for a 0.6% Carbon steel by weight; both axes get larger as the Carbon content of the crystal increases) - compared to austenite's usual cube of 3.57A (larger when Carbon is mixed in with it) and ferrite's 2.87A - made up of the eight original corner austenite Iron atoms forming a "cage" around a diamond shape made of the austenite's original six center-face Iron atoms now chemically bonded to the central Carbon atom that was in the austenite cell when cooling began (cells without the central Carbon atom also become white martensite but they are not chemically bonded, just locked into the shape by their chemically-bonded neighbors) - this diamond shape, longer than it is wide, is also one of the main components in cementite, so white martensite cells with Carbon in them can be viewed as cementite being formed inside of a ferrite crystal. 
The lower the Carbon content, the fewer chemically-bonded cells with Carbon in their centers exist and the more difficult it is to lock the white martensite shape into the crystals, so the faster the initial temperature fall must be to freeze in the austenite cell shape on which the white martensite cell shape is based. Conversely, increasing the Carbon content allows a slower quenching/chilling time to reach the white-martensite-forming temperature range desired and results in an increased hardness (more white martensite forms) for a given cooling rate. Various alloying elements, such as Chromium and Molybdenum, help to do this too, which is the only reason that high hardening levels used in deep-faced, KC-type face-hardened armors - which use rather large amounts of Chromium and, sometimes, Molybdenum - are practical with low-Carbon-content (necessary for adequate toughness) armor steels. White martensite is even more unstable than retained austenite and as brittle as glass and it will gradually break down into tempered martensite, though the rate of breakdown can be very slow - this may be a good trait in old Japanese swords, but not in production armor! By reheating the retained austenite/white martensite combination to near, but below, the CHT and holding it there for a time that depends on the temperature and the thickness of the object, both the retained austenite and the white martensite can be very rapidly changed to pearlite and tempered martensite, respectively, as well as allowing the metal to get the other benefits of tempering. 
Tempered martensite is much less brittle than white martensite and its crystal structure has oval lumps of cementite of many sizes formed into a series of rows (with very small cementite lumps randomly sprinkled around the rows) in a ferrite matrix (surrounding material), somewhat similar to pearlite with very fat, broken-up cementite layers - the higher the Carbon content, the higher the percentage of cementite and the harder the final product for a given cooling rate, though the hardness increase slows as the Carbon content approaches 0.8% and above, where the maximum possible hardness reaches an extrapolated 794 Brinell. This value (equalling 68 RC) can be compared to the absolute maximum Brinell Hardness Number that can be measured of 739 (65 RC) and the usual maximum value of 700 Brinell (62.8 RC) attainable in any repeatable production process and it is rarely attempted; the cemented layer's surface hardness in Krupp's cemented (KC a/A and n/A) and Italian Terni cemented armors (see WWI and post-1930) is, to my knowledge, the only time that even the hardest face-hardened armor has reached 700 Brinell and even here this was done only in the 1-1.5% Carbon by weight cemented layer by special processing and it was above the average of 670-680 Brinell (61-62 RC) maximum for most plates made by Krupp and well above the average of 650 Brinell (60 RC) maximum used by most other manufacturers of KC-type armor, with the lowest cemented surface layer hardness being in British World War II cemented armor and many World War I-era face-hardened armors, with 575-600 Brinell (RC 58) maximum. 
Increasing the amount of Carbon above 0.8% makes the steel easier to harden to a given level, but it also makes it much more difficult to toughen the metal to an acceptable level (more cementite), so armors never used over 0.55% Carbon - the average was 0.3-0.4% Carbon - except for the thin cemented surface layer of Harveyized mild- or nickel-steel and KC-type armors, which was immediately destroyed by the projectile impact, anyway. By using other alloying elements - Chromium and sometimes Molybdenum and, very rarely, Vanadium - to enhance the steel's hardenability (wringing out the maximum effect for a given amount of Carbon) and a lot of Nickel to make the steel tougher, rather high hardness was possible without increased brittleness due to using more Carbon.
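The Brinell and Rockwell C (RC) values quoted together above can be used as a rough conversion table for the high-hardness end of the scale. The sketch below interpolates in straight lines between only the single-valued pairs given in this document (650 Brinell = 60 RC, 700 = 62.8 RC, 739 = 65 RC, 794 = 68 RC). Straight-line interpolation is an assumption, the function name is hypothetical, and nothing outside the quoted range is estimated.

```python
from bisect import bisect_right

# (Brinell, RC) pairs quoted in this document, in increasing order.
HARDNESS_PAIRS = [(650.0, 60.0), (700.0, 62.8), (739.0, 65.0), (794.0, 68.0)]


def brinell_to_rc(brinell: float) -> float:
    """Estimate RC from Brinell by straight-line interpolation between quoted pairs."""
    brinell_values = [b for b, _ in HARDNESS_PAIRS]
    if not brinell_values[0] <= brinell <= brinell_values[-1]:
        raise ValueError("outside the range of the quoted Brinell/RC pairs")
    # Index of the upper pair of the bracketing segment, capped at the last pair.
    upper = min(bisect_right(brinell_values, brinell), len(HARDNESS_PAIRS) - 1)
    (b0, r0), (b1, r1) = HARDNESS_PAIRS[upper - 1], HARDNESS_PAIRS[upper]
    return r0 + (r1 - r0) * (brinell - b0) / (b1 - b0)


for value in (650.0, 675.0, 700.0, 794.0):
    print(f"{value:.0f} Brinell is roughly {brinell_to_rc(value):.1f} RC")
```

The interpolated value for 675 Brinell falls inside the 61-62 RC range quoted above for 670-680 Brinell.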

Hardening cast iron and steel is done by rapidly cooling the object being made either in its entirety or on one surface of the object to harden the portion being so cooled due to the formation of higher hardness (and higher strength, though also higher brittleness) forms of the various crystal structures mentioned in I. Crystal Structures, above. Some of these heating and cooling techniques, such as tempering, can reduce hardness and increase toughness, so making steel is a sometimes complicated process of many steps.

Cast iron and cast steel are hardened usually directly from liquid state by using a regular insulating mold for the portions of the object to be kept soft, so that these portions only gradually cool down to grey cast iron or pearlitic steel, while those portions to be hardened into white cast iron or martensitic steel are shaped by a mold made of a material that is highly conductive to heat and which is kept cool by having water or other material on its far side to carry off the heat - wrought iron or very low-Carbon steel is used for the "chill", as the portion of the mold that is conducting is called, in most cases due to its high melting point and its ability to retain its original shape until the object being cast has cooled down. Grüson chilled cast iron armor was the only major face-hardened armor made this way, but various small armor-steel fittings (cowls protecting sighting and range-finding gear sticking out of turrets, for example) have been made using chilled, face-hardened cast steel (though usually even these were kept homogeneous to reduce brittleness). The control of the temperature deep in the object being cast is not as good as with other cooling methods, so chilling is only employed when the details of the process are not critical and a relatively wide tolerance is allowed in the final results - the exact depth of face in the Grüson armor was not critical and matching an exact hardness pattern also was not necessary, since there is a rather wide range of values for the hardness and depth of face that give virtually identical results in face-hardened armor.

Used on forged, rolled, and hammered steel to be hardened after previous mechanical and heat treatments have shaped the object to essentially its final form (only a small amount of machining for a tight edge fit with an adjacent plate in the case of armor and so forth will be done after hardening) and adjusted its crystal structure to that considered optimum (or at least acceptable) for the final product. Part or all of the object is heated above the CHT and then rapidly cooled by one or more of the following methods: Dipping the portion of the object to be hardened in hot or cold water; spraying that portion and, to prevent cracking due to thermal stress, usually the rest of the object with high-pressure water; dipping it into hot or cold oil; and dipping it into molten lead. These various methods control the final temperature (highest in lead and lowest in cold water) and the rate of heat removal (highest in cold water or, for large, thick objects, with the water spray - since steam formed can interrupt the cooling rate and the spray keeps the steam cleared away - and lowest with molten lead). For some objects, including some forms of face-hardened armor and most armor-piercing projectiles, several methods were used to reach the final hardness pattern and crystal structure.

This is the opposite of chilling and quenching; here the object is softened to prevent cracking or to improve machinability by forming less cementite from the available Carbon. The thoroughness of this effort varies considerably. Normalizing is a form of mild annealing where the object is heated to a fixed temperature above the CHT and then allowed to cool down in open air by itself until it has reached a relatively low temperature (usually room temperature). This gets rid of many of the stress points in the metal after initial pouring and shaping and is usually the first step in the final manufacturing processes to turn the object into its final form - sometimes this step is repeated several times between various manufacturing processes to return the metal to a known baseline state. Full annealing is a deliberate, usually long-lasting, process of heating to very specific temperatures and then slow cooling in one or two ovens to specific final temperatures to ensure that the metal changes its structure at very specific temperatures, which controls crystal (grain) size and the softness, ductility, and toughness of the final product. This is the technique used on white cast iron to form malleable cast iron, for example, and it is an exacting process. Unlike quenching or chilling, these techniques even work with wrought iron, since stress points in the crystal structure can exist without anything to do with Carbon and thus annealing can reduce brittleness and make a more uniform final product.

Either by packing a steel plate's face tightly against bone charcoal (the original "Harveyizing" technique also used by most makers of versions of Krupp cemented-type armors) or by continually spraying the face with methane ("illuminating") gas (Krupp's version of this process also used by some other manufacturers), in either case in an air-tight oven raised to well above the CHT, the carbon would slowly soak into the austenite to a depth of about 1" (2.54cm) or slightly more over a period of about 2-3 weeks, raising the carbon content to about 1-1.5% by weight, which can easily be quench-hardened to the hardest kind of martensite afterward. Introduced in armor as Harveyized nickel-steel armor developed in 1890-91. Increased the surface hardness of any Iron or steel material, including Mild Steel, to 575-700 Brinell with no significant change to the rest of the plate after quenching the face (see Harveyized mild steel armor). Also known as case hardening or carburizing and widely used to get wear-resistant surfaces on items like ball bearings. Cementing is a very old method for hardening iron objects, originally using the technique of burying the object in the hot coals of the wood-burning furnace and leaving it there with the furnace kept at a high temperature. Krupp and some other early manufacturers tried to get a maximum surface hardness of circa 650-700 Brinell, but most manufacturers prior to 1930 were content to get a cemented layer of only 575-625 Brinell. After 1930, the average cemented layer hardness increased toward the Krupp value as more manufacturers became better at toughening the armor. However, British World War II cemented armor was deliberately made with a circa-600 Brinell cemented layer to minimize brittleness even in this sacrificial surface layer (it was always destroyed during an impact, whether or not it succeeded in damaging the projectile's nose).
The success of this soft-cemented-layer British World War II cemented armor and Japanese World War II Vickers hardened non-cemented, face-hardened armor shows that the deep, decrementally-hardened KC-type armor face was much more important than the thin cemented layer when high-quality, hard-capped AP projectiles came into widespread use after World War I - Krupp, for one, retained this thin layer because of "tradition", not because it was still needed in its World War II KC n/A armor. However, thin high-strength steel armor used by armored cars and the like retained cementing as the primary face-hardening technique through the end of World War II as the entire face was essentially the thickness of the cemented layer by itself, anyway.

This is a special form of quenching/chilling designed to get a deep, gradually-softening face on a face-hardened plate. Grüson Chilled Cast Iron armor formed its face using the chilling process, which was possible because of the very high Carbon content of the cast iron allowing rather rapid hardening. However, armor steels use rather low amounts of Carbon to prevent brittleness, so deep hardening is only possible by the use of alloying elements - primarily Chromium, though Molybdenum was used extensively after World War I in addition to Chromium - that slow down the transformation of austenite to ferrite, allowing martensite to form deeper in the plate where the cooling rate is slower, and that form additional carbides to increase the hardness by more efficiently using the existing Carbon. Nickel was also of use here, since it toughened steel considerably and allowed higher hardnesses to be used while keeping the toughness above the minimum required.

This process applied to steel was introduced by Krupp in 1894 as his famous Krupp Cemented face-hardened armor (later called Krupp cemented "old type" in Germany after World War I to separate it from the post-World War I improved KC "new type" ("KC n/A") developed by Krupp during the late 1920's and early 1930's) and in less than a decade had made all other forms of full-strength armor steel obsolete when used for the primary protection of warships and, later, armored land vehicles - including all previous homogeneous metal armors because his new Chromium-Nickel-Steel was harder and tougher and thus more resistant than the older Nickel-Steel. It was originally combined with the cementing process, but a few face-hardened armors dispensed with cementing and successfully (or not) employed decremental hardening by itself.

Except for a final, post-hardening temper (not always used in early KC-type armors) and minor machining, the decremental hardening process was the last heat treatment applied to a KC-type face-hardened Chromium-Nickel-Steel armor plate. The plate was completely heat treated for optimum crystal structure, mechanically worked (hammered, rolled, and forged), cemented (if used), and shaped to as close to its final form as possible, then laid flat and packed around the edges with an insulating layer of sand or loam, so only its face and back surfaces were exposed. Any portions of the face where holes were to be cut were covered with thick insulation (usually asbestos) to reduce both the rates of heating and of cooling and thus prevent hardening when quenched. All temperatures and times used were carefully regulated using data from previous tests and production runs. The plate was run into an oven and raised slowly to an even red hot temperature below the CHT, then the face surface only was evenly blasted by flaming jets to raise it to a much higher white hot temperature and the plate allowed to soak in this condition for a specified time, depending on the plate size and thickness. The CHT would gradually move into the plate in a flat front and when the timer indicated that it had reached the desired depth (which varied over a wide range between manufacturers and dates of manufacture), the plate would be removed from the oven and either both the face and back would be sprayed with cold high-pressure water (original Krupp technique) or the plate would first undergo dipping of the face in water and/or oil (British Vickers KC-type armor manufactured prior to World War I, for example) prior to the final water quench.

The result was a "decrementally hardened" or "deep" face layer of circa 500 Brinell (535 Brinell is the hardest I know of, and not in a normal production plate) just behind the cemented layer (if used), dropping off gradually in hardness to the level of the unhardened back in one of several ways, depending on the final face and back temperatures, the heating time, and the plate's metallurgical makeup. The original KC a/A armor had the deep face layer's hardness drop off with increasing depth in either a straight line or a "ski-slope" (a rapid, ramp-like drop in the middle of the face layer, but slow near the surface and near the unhardened back, like a child's slide) to about 350 Brinell at about 20% into the plate from the face surface. At this point the drop in hardness would become much steeper in another ski-slope - the transition layer - until it merged with the back layer's circa 225 Brinell hardness at 33-35% of the plate from the surface of the face, remaining at a constant hardness from there to the back surface of the plate. Except for the sudden drop in hardness at the back of the cemented layer (if used) from 650-700 Brinell to the circa 500 Brinell level, in no place was there any sudden change in hardness, though there was the relatively sudden change in the rate that the hardness was dropping at the boundary of the deep face layer and the transition layer.
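The KC a/A hardness pattern described above can be sketched as a simple piecewise-linear function of depth. This is only an illustrative approximation: it takes the straight-line variant for both the deep face layer and the transition layer (the text also allows "ski-slope" curves), ignores the thin cemented layer, and assumes 34% as a midpoint of the 33-35% range. The function name and constants are hypothetical.

```python
# Illustrative sketch only: piecewise-linear approximation of the KC a/A
# hardness pattern. The cemented surface layer is ignored, the "ski-slope"
# curves are replaced by straight lines, and 0.34 is an assumed midpoint
# of the 33-35% range quoted in the text.
FACE_HB = 500.0      # deep face hardness just behind the cemented layer
KNEE_HB = 350.0      # hardness where the transition layer begins
BACK_HB = 225.0      # unhardened back layer
KNEE_DEPTH = 0.20    # fraction of plate thickness where the transition starts
BACK_DEPTH = 0.34    # fraction of plate thickness where the back layer starts


def kc_aa_hardness(depth_fraction: float) -> float:
    """Approximate Brinell hardness at a fractional depth (0 = face, 1 = back)."""
    if not 0.0 <= depth_fraction <= 1.0:
        raise ValueError("depth_fraction must be between 0 and 1")
    if depth_fraction <= KNEE_DEPTH:
        # Deep face layer: straight-line drop from 500 to 350 Brinell.
        return FACE_HB + (KNEE_HB - FACE_HB) * depth_fraction / KNEE_DEPTH
    if depth_fraction <= BACK_DEPTH:
        # Transition layer: steeper straight-line drop from 350 to 225 Brinell.
        t = (depth_fraction - KNEE_DEPTH) / (BACK_DEPTH - KNEE_DEPTH)
        return KNEE_HB + (BACK_HB - KNEE_HB) * t
    # Back layer: constant hardness to the back surface.
    return BACK_HB


if __name__ == "__main__":
    for d in (0.0, 0.10, 0.20, 0.27, 0.34, 0.60, 1.0):
        print(f"{d:4.0%} of plate depth: about {kc_aa_hardness(d):.0f} Brinell")
```

With these assumed numbers the transition layer loses hardness faster per unit depth than the deep face layer, which matches the "much steeper" drop described in the text.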

The cemented layer/deep face layer combined is also called the undrillable portion of the face, while the entire cemented layer, deep face layer, and transition layer combined is called the chill or, loosely, the face, though this can be confused with the more limited scope deep face layer part of the undrillable portion of the chill. The width of the undrillable chill portion, the width of the transition layer, the hardness level where the transition layer begins, and the average hardness of the back layer were all changed slightly or considerably by later adopters of this process, including, after World War I, by Krupp itself. KC n/A armor increased the back layer hardness to 240 Brinell (the maximum used by anyone); increased the depth of the chill to 41% (very exact value); and adjusted the hardness drop in the undrillable chill layer to exactly match the transition layer drop so that the chill went from about 500 Brinell just behind the cemented layer in a single straight line or gradually-flattening curve, making the point where the transition layer starts invisible (it should be when the hardness drops to somewhere around 350 Brinell, depending on whose definition of "undrillable" you use!). This armor was tempered and was much superior to KC a/A in every way.
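As a rough illustration of the KC n/A pattern, the sketch below models the chill as a single straight line from about 500 Brinell just behind the cemented layer to the 240 Brinell back at 41% depth, then solves for the depth at which the hardness passes 350 Brinell, the nominal "undrillable" limit. The straight-line choice (the text also allows a gradually-flattening curve), the function names, and the omission of the cemented layer are all assumptions.

```python
# Illustrative sketch only: straight-line model of the KC n/A chill,
# ignoring the thin cemented layer. Names and the linear shape are assumed.
FACE_HB = 500.0      # hardness just behind the cemented layer
BACK_HB = 240.0      # KC n/A back layer hardness
CHILL_DEPTH = 0.41   # chill depth as a fraction of plate thickness


def kc_na_hardness(depth_fraction: float) -> float:
    """Approximate Brinell hardness at a fractional depth (0 = face, 1 = back)."""
    if not 0.0 <= depth_fraction <= 1.0:
        raise ValueError("depth_fraction must be between 0 and 1")
    if depth_fraction >= CHILL_DEPTH:
        return BACK_HB
    return FACE_HB + (BACK_HB - FACE_HB) * depth_fraction / CHILL_DEPTH


def depth_at_hardness(brinell: float) -> float:
    """Fractional depth at which the straight-line chill reaches a hardness."""
    if not BACK_HB <= brinell <= FACE_HB:
        raise ValueError("hardness must lie between the back and face values")
    return CHILL_DEPTH * (FACE_HB - brinell) / (FACE_HB - BACK_HB)


if __name__ == "__main__":
    d350 = depth_at_hardness(350.0)
    print(f"350 Brinell is reached at about {d350:.1%} of the plate thickness")
```

Under this straight-line assumption the 350 Brinell point falls a little under a quarter of the way into the plate, even though nothing in the curve marks it.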

In other face-hardened plates, the deep face layer hardness stays constant for some depth and then begins to decrease in a ski-slope (deep face layer/transition layer boundary again somewhat arbitrarily specified) or in a very sudden drop (essentially no transition layer at all). U.S. Navy Bethlehem thin chill Class "A" armor was unusual in that it had essentially no deep face layer: the drop in hardness behind the cemented layer was so steep that the cemented layer itself became the entire face, with only a narrow, very steep transition layer, not much thicker than the cemented layer, connecting it to the back layer. U.S. Navy Midvale non-cemented Class "A" armor, in contrast, had an extreme chill depth of over 80% of the plate. The depth of the chill (actually the thickness of the unhardened back layer as a percent of the total plate thickness) is important not only for damaging projectiles, but also because the hard chill always fails by breaking (brittle fracture) and this is a surface phenomenon, as opposed to ductile tearing, where the entire volume of armor is distorted and pushed aside by the projectile as it penetrates (see scaling). Due to the difficulty of precisely controlling temperature and due to the use of the circa-1"-thick cemented surface layer in most KC-type armors, the minimum plate thickness for the deep face process described above was usually circa 4" (10.2cm) or greater (6" (15.2cm) for Japanese World War I-era Vickers cemented armor, for example), though Krupp and Witkowitz originally made their KC armors down to 3.2" (8cm) up through the end of World War I - even they increased the minimum to 4" after World War I.

Decremental hardening is an old process. For example, it was used in Japanese swords and daggers for centuries: A low-Carbon steel rectangular block was embedded in the coals of a furnace, heated white hot, removed, folded in half, hammered back to its original shape, and put back into the furnace. This was done many times, creating thousands of thin parallel layers of low-Carbon steel alternating with high-Carbon steel from the soot adhering to the surface of the metal prior to folding. The block was finally hammered to its final, stretched shape with these layers parallel to its wide sides and polished. The back edge of the gently-backward-curving sword was thick and flat, with its thickness kept constant from handle to tip (the end of the sword was beveled, since stabbing was not the purpose of the sword), and the sword body tapered smoothly cross-wise in a wedge shape to a single, razor-sharp edge where the many layers were crushed into a single mass of high-Carbon steel.
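The "thousands of thin parallel layers" follow from simple doubling arithmetic. The sketch below assumes an idealized process in which the block starts as one layer and every fold exactly doubles the layer count, with no material lost or layers merged during hammering; the function names are hypothetical.

```python
# Illustrative sketch only: idealized layer count for a folded billet,
# assuming one starting layer and a perfect doubling on every fold.
def layers_after_folds(folds: int) -> int:
    """Number of layers after folding the block in half `folds` times."""
    if folds < 0:
        raise ValueError("folds must be non-negative")
    return 2 ** folds


def folds_needed(target_layers: int) -> int:
    """Smallest number of folds giving at least `target_layers` layers."""
    folds = 0
    while layers_after_folds(folds) < target_layers:
        folds += 1
    return folds


if __name__ == "__main__":
    for n in (5, 10, 12, 15):
        print(f"{n} folds -> {layers_after_folds(n)} layers")
```

Under this idealization about ten folds are already enough to pass a thousand layers, so "many times" need not mean more than ten to fifteen folds.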